TCP: Context-Aware Pooling via Top-k% Activation Selection

Abstract

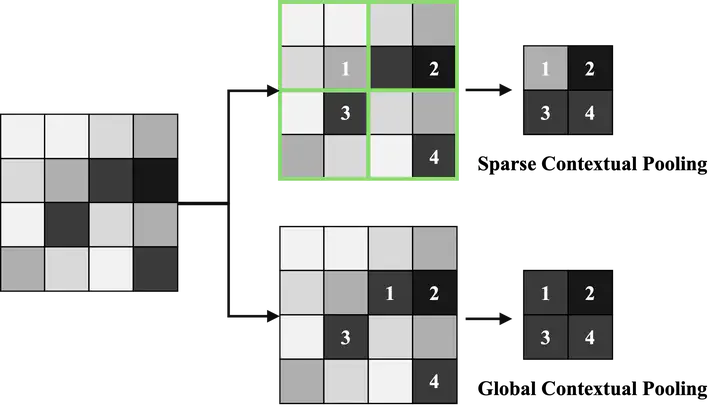

Pooling is a fundamental operation in convolutional neural networks (CNNs), providing spatial reduction and hierarchical abstraction. However, conventional pooling methods such as max and average pooling operate locally and tend to over- or under-estimate activations, so they often fail to capture semantically meaningful features across the broader context of an image. This inherent limitation hampers performance in vision tasks that demand both precise localization and global interpretation. To alleviate this, we introduce Top-k% Contextual Pooling (TCP), a novel pooling framework designed to retain the most informative activations based on their contextual importance. TCP has two variants: (1) Sparse Contextual Pooling, which performs top-k selection within local kernel windows, and (2) Global Contextual Pooling, which identifies the top-k% activations across the entire feature map. Given a kernel size and a target output resolution, TCP automatically determines the stride values and reconstructs the output through a deterministic process that preserves spatial coherence without additional learnable parameters. We evaluate TCP on a wide range of computer vision tasks, including image classification, object detection, object tracking, semantic segmentation, and generation. Experimental results demonstrate consistent improvements in accuracy and robustness across these tasks. Beyond performance gains, TCP provides a mechanism for interpreting model behavior by revealing how high-activation regions evolve across layers in the CNN pyramid. This hierarchical interpretation supports efficient representation while enabling layer-wise insight into the model's attention and decision patterns.
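To make the two variants concrete, the following is a minimal pure-Python sketch on a single-channel feature map. The abstract does not state the stride rule, how the selected activations are aggregated, or what form the global selection takes, so everything here is an assumption made for illustration: the function names are hypothetical, `infer_stride` uses floor((input - kernel) / (output - 1)), the sparse variant averages the k largest values in each window, and the global variant returns a binary mask of the top-k% positions.

```python
import math


def infer_stride(in_size, kernel, out_size):
    """Assumed rule: largest stride that fits `out_size` windows of width `kernel`."""
    if out_size == 1:
        return 1
    stride = (in_size - kernel) // (out_size - 1)
    if stride < 1:
        raise ValueError("target resolution too large for this kernel size")
    return stride


def sparse_topk_pool(fmap, kernel, out_size, k):
    """Assumed aggregation: mean of the k largest activations in each window."""
    h, w = len(fmap), len(fmap[0])
    sh = infer_stride(h, kernel, out_size)
    sw = infer_stride(w, kernel, out_size)
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            # Collect the kernel x kernel window anchored at (i*sh, j*sw).
            window = [
                fmap[i * sh + di][j * sw + dj]
                for di in range(kernel)
                for dj in range(kernel)
            ]
            top = sorted(window, reverse=True)[:k]
            row.append(sum(top) / len(top))
        out.append(row)
    return out


def global_topk_mask(fmap, percent):
    """Binary mask of the top `percent`% activations over the whole map."""
    flat = sorted((v for r in fmap for v in r), reverse=True)
    n_keep = max(1, math.ceil(len(flat) * percent / 100))
    threshold = flat[n_keep - 1]
    # Ties at the threshold are all kept, so slightly more than n_keep may pass.
    return [[1 if v >= threshold else 0 for v in r] for r in fmap]


fmap = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
pooled = sparse_topk_pool(fmap, kernel=2, out_size=2, k=2)
mask = global_topk_mask(fmap, percent=25)
```

With `k=1` the sparse variant reduces to ordinary max pooling, and with `k` equal to the window area it reduces to average pooling, which is one way to read the claim that top-k selection sits between the two conventional operators.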