Publication

Fine-Grained Binary Object Segmentation in Remote Sensing Imagery via Path-Selective Test-Time Adaptation

Kyungsu Lee, Haeyun Lee, Juhum Park, and Jae Youn Hwang. "Fine-Grained Binary Object Segmentation in Remote Sensing Imagery via Path-Selective Test-Time Adaptation," IEEE Transactions on Geoscience and Remote Sensing (TGRS), 2024.

Abstract

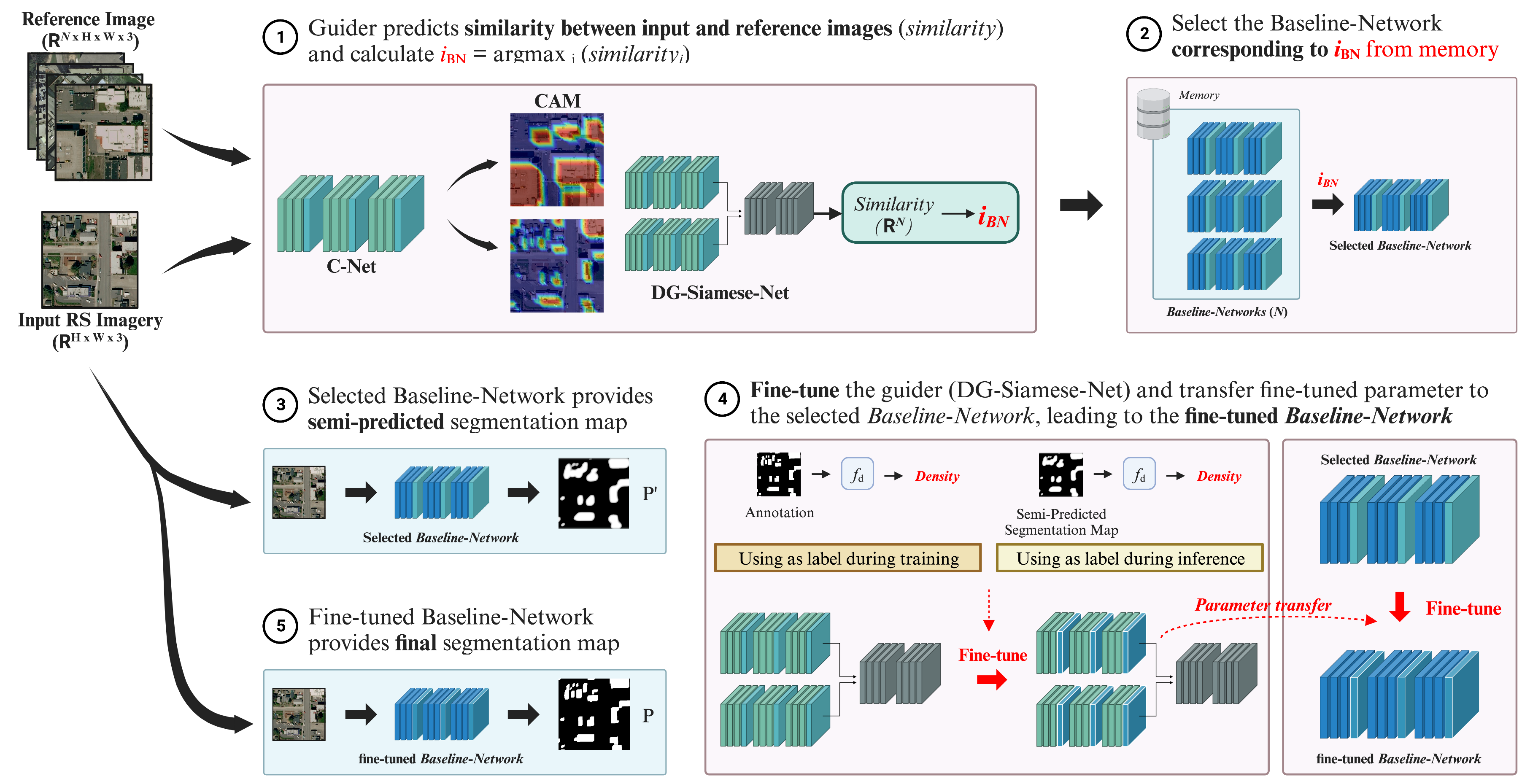

For several decades, geospatial object segmentation in remote sensing (RS) images has been important for both scientific and industrial purposes. Object segmentation plays a pivotal role in analyzing the expansion of urban and rural areas and in advancing sustainable development within RS. Deep learning (DL)-based segmentation methods overcome the limitations of conventional vision-based analysis and yield precise predictions by using convolutional neural networks (CNNs). However, CNNs classify images at the pixel level and generate outputs from probability distributions produced by the SoftMax function. As a result, morphological properties such as shape and object density are not reflected in predictions on RS imagery, which leads to imprecise results. In addition, because of the intrinsic nature of probability-based segmentation, fine-grained segmentation may not be achieved, which leads to coarse predictions at the boundaries of geospatial objects. To address these issues, this article introduces a novel DL framework, the density-based guide network (DG-Net), which incorporates the density of segmentation targets into pixel-wise classification through test-time adaptation. DG-Net first estimates the density of segmentation targets in the input image, then fine-tunes the baseline network to reflect this density, thereby generating precise segmentation outputs. The effectiveness of DG-Net is evaluated on various multitarget segmentation benchmarks in RS imagery. Experimental results show that DG-Net outperforms state-of-the-art (SotA) models in object segmentation across numerous aerial and satellite image datasets.
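The two-step idea in the abstract (estimate target density, then adapt the predictor at test time so its output reflects that density) can be illustrated with a toy sketch. This is not the DG-Net implementation. It assumes that "density" means the mean foreground probability over all pixels, that a density estimate is already available as `target_density`, and that "fine-tuning" is reduced to adjusting one scalar bias added to fixed per-pixel logits. All function and variable names here are hypothetical.

```python
import math

def sigmoid(x):
    # For two classes, SoftMax over (background, foreground) logits reduces
    # to a sigmoid of the foreground-minus-background logit difference.
    return 1.0 / (1.0 + math.exp(-x))

def predicted_density(logits, bias):
    """Mean foreground probability over all pixels (assumed 'density')."""
    probs = [sigmoid(z + bias) for row in logits for z in row]
    return sum(probs) / len(probs)

def adapt_bias(logits, target_density, steps=200, lr=5.0):
    """Toy test-time adaptation: gradient descent on (density - target)^2
    with respect to a single scalar bias (a stand-in for fine-tuning)."""
    flat = [z for row in logits for z in row]
    bias = 0.0
    for _ in range(steps):
        probs = [sigmoid(z + bias) for z in flat]
        density = sum(probs) / len(probs)
        # d(density)/d(bias) is the mean of p * (1 - p)
        d_density = sum(p * (1.0 - p) for p in probs) / len(probs)
        bias -= lr * 2.0 * (density - target_density) * d_density
    return bias

def segment(logits, bias):
    """Binary mask: a pixel is foreground if its adapted probability >= 0.5."""
    return [[1 if sigmoid(z + bias) >= 0.5 else 0 for z in row] for row in logits]

# Hypothetical 4x4 logit map from a frozen baseline network.
logits = [
    [-2.0, -1.5, -1.0, 0.5],
    [-2.0, -1.0, 0.5, 1.0],
    [-1.5, 0.5, 1.0, 1.5],
    [-1.0, -0.5, 0.2, 2.0],
]
target_density = 0.6  # assumed output of a separate density-estimation step

bias = adapt_bias(logits, target_density)
mask_before = segment(logits, 0.0)
mask_after = segment(logits, bias)
```

In this sketch the adapted bias shifts borderline pixels toward the foreground class until the predicted density matches the estimate. A real system would adapt network parameters rather than a single scalar.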