Boundary-oriented Binary Building Segmentation Model with Two Scheme Learning for Aerial Images

Abstract

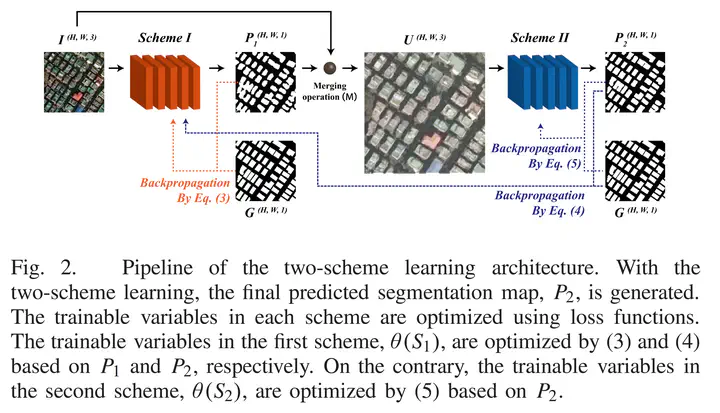

Various deep learning-based segmentation models have been developed to segment buildings in aerial images. However, the segmentation maps predicted by conventional convolutional neural network-based methods do not accurately capture the shapes and boundaries of buildings. In this article, to improve the prediction accuracy for building boundaries and shapes in aerial images, we propose the boundary-oriented binary building segmentation model (B3SM). To construct B3SM for boundary-enhanced semantic segmentation, we present two-scheme learning (Schemes I and II), which uses the upsampling interpolation method (USIM) as a new operator and a boundary-oriented loss function (B-Loss). In Scheme I, a raw input image is transformed into a presegmented map. In Scheme II, the presegmented map from Scheme I is transformed into a more fine-grained representation. The USIM operator connects the two schemes. In addition, the novel B-Loss function is used in B3SM to extract the boundary features of buildings effectively. To quantitatively evaluate the shapes and boundaries of the buildings segmented by B3SM, we develop a new metric called the boundary-oriented intersection over union (B-IoU). After evaluating the effectiveness of two-scheme learning, USIM, and B-Loss for building segmentation, we compare the performance of B3SM with that of other state-of-the-art methods on public and custom datasets. The experimental results demonstrate that B3SM outperforms these models, producing more accurate shapes and boundaries for segmented buildings in aerial images.
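The abstract names B-IoU but does not define it. As a rough illustration of why a boundary-restricted overlap score can be more sensitive to contour errors than a region-level IoU, the following minimal sketch computes both on binary masks. It is an assumption for illustration only, not the B-IoU formulation of this work: the boundary here is simply the set of foreground pixels with at least one 4-connected background neighbour, and the names `boundary`, `boundary_iou`, and `region_iou` are hypothetical.

```python
from typing import List, Set, Tuple

Mask = List[List[int]]   # binary mask: 1 = building, 0 = background
Pixel = Tuple[int, int]  # (row, column)


def foreground(mask: Mask) -> Set[Pixel]:
    """Return the coordinates of all building pixels."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}


def boundary(mask: Mask) -> Set[Pixel]:
    """Assumed boundary definition: foreground pixels that touch background
    (or the image border) through a 4-connected neighbour."""
    fg = foreground(mask)
    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return {
        (r, c)
        for r, c in fg
        if any((r + dr, c + dc) not in fg for dr, dc in neighbours)
    }


def iou(a: Set[Pixel], b: Set[Pixel]) -> float:
    """Intersection over union of two pixel sets (1.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def region_iou(pred: Mask, gt: Mask) -> float:
    """Standard IoU over all building pixels."""
    return iou(foreground(pred), foreground(gt))


def boundary_iou(pred: Mask, gt: Mask) -> float:
    """IoU restricted to boundary pixels (illustrative, not the paper's B-IoU)."""
    return iou(boundary(pred), boundary(gt))
```

Because the boundary set is much smaller than the full region, a single misplaced corner pixel changes the boundary score more than the region score. A practical variant would usually tolerate small offsets by widening the boundary into a band of a few pixels.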